[GAN inversion]

vi image_inversion.py

mkdir noise

python image_inversion.py --resolution 256 --src_im human01.jpg --src_dir /disk1/human_examples/ --save_dir /disk1/human_examples --weight_file /disk1/zzalgun/pretrained_models/ffhq_256_rosinality.pt vi image_inversion.py

mkdir noise

python image_inversion.py --resolution 256 --src_im human01.jpg --src_dir /disk1/human_examples/ --save_dir /disk1/human_examples --weight_file /disk1/zzalgun/pretrained_models/ffhq_256_rosinality.pt

noise 저장하는 부분에서 에러남

latent_W 는 저장됨

import numpy as np

import matplotlib.pyplot as plt

from stylegan_layers import G_mapping,G_synthesis

import argparse

import torch

import torch.nn as nn

from collections import OrderedDict

import torch.nn.functional as F

from torchvision.utils import save_image

import torch.optim as optim

from torchvision import transforms

from PIL import Image

import PIL

device = 'cuda'

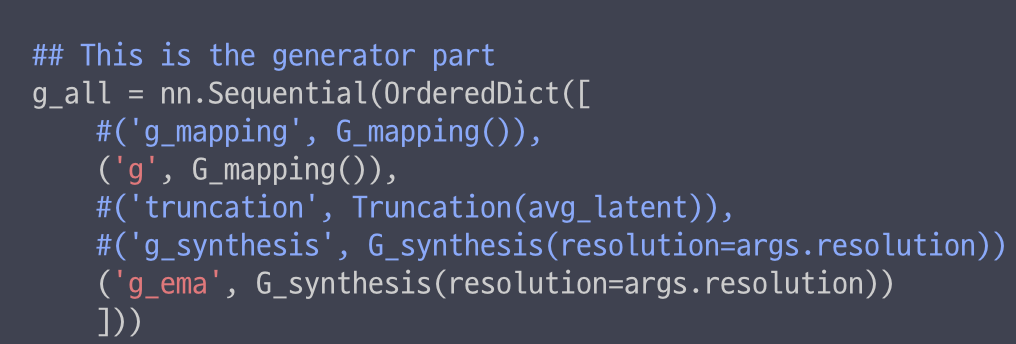

G = nn.Sequential(OrderedDict([

('g', G_mapping()),

('g_ema', G_synthesis(resolution=256))

]))

G.load_state_dict(torch.load('/disk1/zzalgun/pretrained_models/ffhq_256_rosinality.pt', map_location=device), strict=False)

G.eval()

G.to(device)

g_mapping, g_synthesis = G[0], G[1]

dlatent = np.load('./latent_W/human01.npy')

dlatent = torch.from_numpy(dlatent).to('cuda')

synth_img = g_synthesis(dlatent)

synth_img2 = (synth_img+1.0) / 2.0

save_image(synth_img.clamp(0, 1), "./test.png") │

#save_image(synth_img2.clamp(0, 1), "./test.png") │

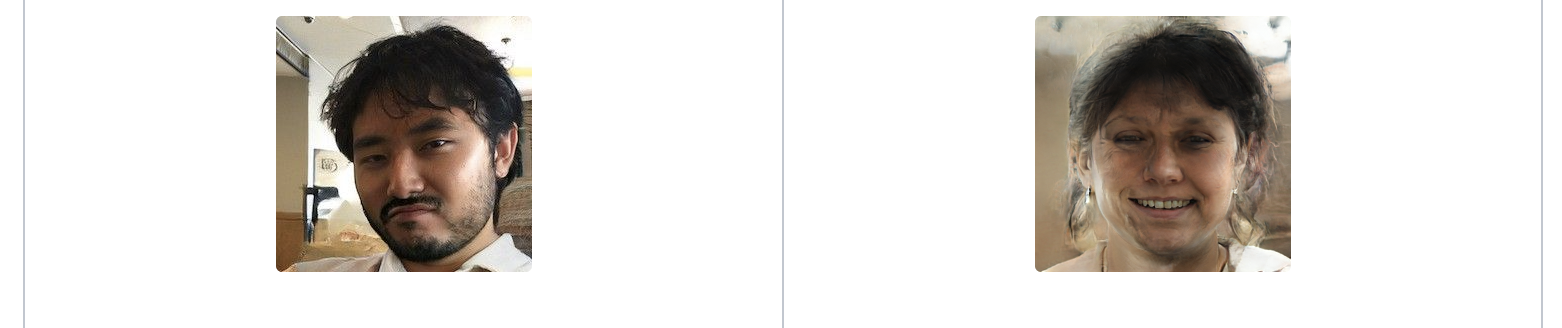

latent_W 폴더에 생성된 npy로는 이미지가 이상하게 생성됐는데, inversion 과정에서 저장한 snapshot은 꽤 괜찮다?

조금더 다뤄보면 될 듯.

[SOAT]

# lpip가 없어서 rosinality에서 가져옴

cp -r ../stylegan2-pytorch/lpips ./

# 라이브러리 추가 설치

pip install scikit-image

pip install kornia

python projector.py /disk1/human_examples/human01.jpg

inversion_stats.npz 파일이 생성되었고, inversion_codes/ 폴더 아래 human01.pt가 생성됨

Improving Inversion and Generation Diversity in StyleGAN using a Gaussianized Latent Space

Modern Generative Adversarial Networks are capable of creating artificial, photorealistic images from latent vectors living in a low-dimensional learned latent space. It has been shown that a wide range of images can be projected into this space, including

arxiv.org

human01.pt를 활용해서 이미지 복원하기

import util

from model import Generator

import torch

from PIL import Image

g_ema = Generator(256, 512, 8)

g_ema.load_state_dict(torch.load('/disk1/zzalgun/pretrained_models/ffhq_256_rosinality.pt')['g_ema'], strict=False)

g_ema = g_ema.to('cuda').eval()

source = util.load_source(['human01'], g_ema, 'cuda')

source_im, _ = g_ema(source)

def make_image(tensor):

return (

tensor.detach()

.clamp_(min=-1, max=1)

.add(1)

.div_(2)

.mul(255)

.type(torch.uint8)

.permute(0, 2, 3, 1)

.to("cpu")

.numpy()

)

img = make_image(source_im)

pil_img = Image.fromarray(img[0])

pil_img.save('./test_human01.png')

from collections import OrderedDict

emoji_generator = Generator(256, 512, 8)

ckpt = torch.load('/disk1/zzalgun/few-shot_results/ios_emoji/ffhq_ios_emoji_001000.pt')['g_ema']

# dict key가 안 맞는 문제가 있어서 수정

new = OrderedDict()

for key in ckpt.copy().keys():

val = ckpt[key] # pop 하면 삭제됨

key = key.replace('module.', '')

new[key] = val

emoji_generator.load_state_dict(new, strict=False)

emoji_generator = emoji_generator.to('cuda').eval()

source = util.load_source(['human01'], emoji_generator, 'cuda')

source_im, _ = emoji_generator(source)

img = make_image(source_im)

pil_img = Image.fromarray(img[0])

pil_img.save('./emoji_1000_human01.png')

python projector.py /disk1/human_examples/human02.jpg

###########################################################

import util

from model import Generator

import torch

from PIL import Image

from collections import OrderedDict

g_ema = Generator(256, 512, 8)

g_ema.load_state_dict(torch.load('/disk1/zzalgun/pretrained_models/ffhq_256_rosinality.pt')['g_ema'], strict=False)

g_ema = g_ema.to('cuda').eval()

source = util.load_source(['human02'], g_ema, 'cuda')

source_im, _ = g_ema(source)

def make_image(tensor):

return (

tensor.detach()

.clamp_(min=-1, max=1)

.add(1)

.div_(2)

.mul(255)

.type(torch.uint8)

.permute(0, 2, 3, 1)

.to("cpu")

.numpy()

)

img = make_image(source_im)

pil_img = Image.fromarray(img[0])

pil_img.save('./test_human02.png')

emoji_generator = Generator(256, 512, 8)

ckpt = torch.load('/disk1/zzalgun/few-shot_results/ios_emoji/ffhq_ios_emoji_003000.pt')['g_ema']

# dict key가 안 맞는 문제가 있어서 수정

new = OrderedDict()

for key in ckpt.copy().keys():

val = ckpt[key] # pop 하면 삭제됨

key = key.replace('module.', '')

new[key] = val

emoji_generator.load_state_dict(new, strict=False)

emoji_generator = emoji_generator.to('cuda').eval()

source = util.load_source(['human02'], emoji_generator, 'cuda')

source_im, _ = emoji_generator(source)

img = make_image(source_im)

pil_img = Image.fromarray(img[0])

pil_img.save('./emoji_3000_human02.png')

'인공지능 > computer vision' 카테고리의 다른 글

| Face Alignment (0) | 2022.08.12 |

|---|---|

| Audio Reactive styleGAN (pending) (0) | 2022.08.12 |

| GAN실험 - few-shot GAN adaptation (0) | 2022.08.12 |

| GAN실험 - AnimeGANv2 학습 (0) | 2022.08.12 |

| GAN실험 - FreezeG (0) | 2022.08.11 |