Week 1

model = keras.Sequential([keras.layers.Dense(units=1, input_shape=[1])])

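model.compile(optimizer='sgd', loss='mean_squared_error')

- Dense: defines a layer of connected neurons. There is only one Dense layer here and it has units=1, so the model is a single layer with a single neuron.

- input_shape=[1]: the model takes one value as input.

- The loss function measures how good or bad the current guess was, and the optimizer uses that measurement to decide the next guess.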

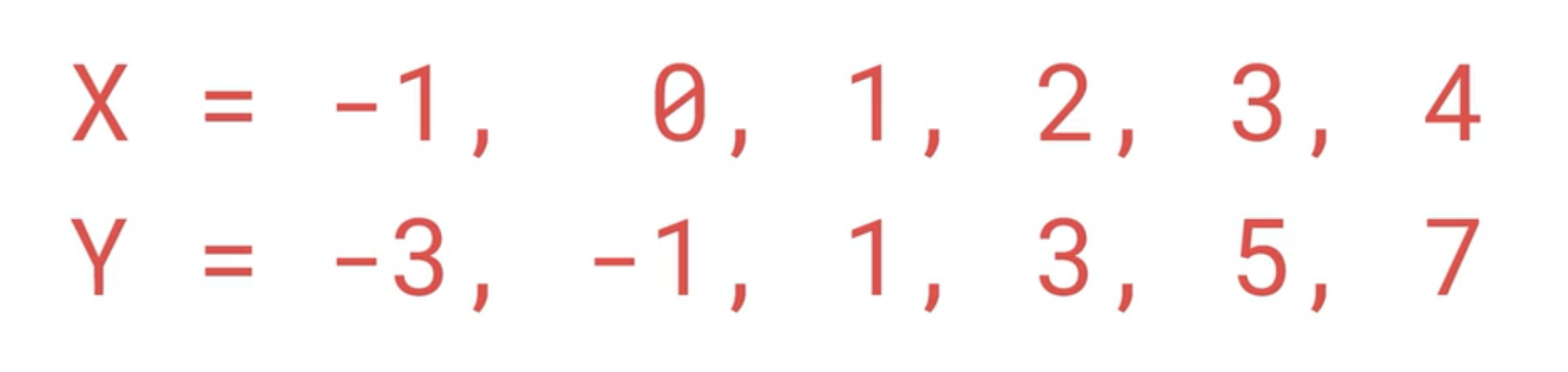

xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0], dtype=float)

ys = np.array([-3.0, -1.0, 1.0, 3.0, 5.0, 7.0], dtype=float)

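model.fit(xs, ys, epochs=500)

- Training: fit the model to xs and ys for 500 epochs.

model.predict(np.array([10.0]))

- Prediction: ask the trained model for y at x = 10.0. The input is wrapped in np.array because recent Keras versions may reject a plain Python list.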
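To make the roles of the loss and the optimizer concrete, here is a minimal sketch of what the one-neuron model does during training, written in plain Python instead of Keras. It is only an illustration under assumptions: it uses full-batch gradient descent with a learning rate of 0.01, starts from w = 0 and b = 0 (Keras starts from random weights), and the names `train_single_neuron`, `w`, and `b` are hypothetical.

```python
# Training data from the notes: the hidden rule is y = 2x - 1.
xs = [-1.0, 0.0, 1.0, 2.0, 3.0, 4.0]
ys = [-3.0, -1.0, 1.0, 3.0, 5.0, 7.0]


def train_single_neuron(xs, ys, epochs=500, learning_rate=0.01):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0  # assumed starting guess, not what Keras would pick
    n = len(xs)
    for _ in range(epochs):
        # Loss side: how far off is the current guess for every sample?
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of mean((w*x + b - y)^2) with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Optimizer side: move the guess a small step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = train_single_neuron(xs, ys)
prediction = w * 10.0 + b
print(w, b, prediction)
```

The prediction for x = 10.0 should land close to 19 but not exactly on it, because the neuron only approximates the rule from six samples and a limited number of steps.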

Assignment

import tensorflow as tf

import numpy as np

# GRADED FUNCTION: house_model

def house_model():

    ### START CODE HERE

    # Define input and output tensors with the values for houses with 1 up to 6 bedrooms

    # Hint: Remember to explicitly set the dtype as float

    xs = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], dtype=float)

    ys = np.array([1.0, 1.5, 2.0, 2.5, 3.0, 3.5], dtype=float)

    # Define your model (should be a model with 1 dense layer and 1 unit)

    model = tf.keras.Sequential([tf.keras.layers.Dense(units=1, input_shape=[1])])

    # Compile your model

    # Set the optimizer to Stochastic Gradient Descent

    # and use Mean Squared Error as the loss function

    model.compile(optimizer='sgd', loss='mean_squared_error')

    # Train your model for 1000 epochs by feeding the i/o tensors

    model.fit(xs, ys, epochs=1000)

    ### END CODE HERE

    return model

# Get your trained model

model = house_model()

new_y = 7.0

prediction = model.predict(np.array([new_y]))[0]

print(prediction)

Week 2

fashion_mnist = tf.keras.datasets.fashion_mnist

(train_images, train_labels), (test_images, test_labels) = fashion_mnist.load_data()

model = keras.Sequential([

    keras.layers.Flatten(input_shape=(28, 28)),

    keras.layers.Dense(128, activation=tf.nn.relu),

    keras.layers.Dense(10, activation=tf.nn.softmax)

])

- Note the first and last layers.

- First layer, Flatten: turns each 28x28 image into a linear array of 784 values.

- Last layer: there are 10 classes, so it has 10 neurons.

- Middle (hidden) layer: the Dense layer with 128 units.
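The first and last layers can be illustrated without TensorFlow. The sketch below is a plain-Python approximation, not the Keras implementation: `flatten` mimics what Flatten does to one image, and `softmax` shows how 10 raw scores become 10 class probabilities. The blank image and the score values are made up for illustration.

```python
import math


def flatten(image):
    """Turn a 2D list of rows into one flat list, like the Flatten layer."""
    return [pixel for row in image for pixel in row]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(scores)
    # Subtracting the maximum keeps exp() numerically stable.
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


image = [[0.0] * 28 for _ in range(28)]  # hypothetical blank 28x28 image
flat = flatten(image)
print(len(flat))

scores = [0.1 * i for i in range(10)]  # hypothetical raw outputs, one per class
probs = softmax(scores)
predicted_class = probs.index(max(probs))
print(predicted_class, sum(probs))
```

The predicted class is simply the index of the largest probability, which is why the last layer needs one neuron per class.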

class myCallback(tf.keras.callbacks.Callback):

    def on_epoch_end(self, epoch, logs={}):

        if (logs.get('loss') < 0.4):

            print("\nLoss is low so cancelling training!")

            self.model.stop_training = True

- The callback runs at the end of every epoch.

- The current loss is available in logs.

callbacks = myCallback() #instantiate the class

...

model.fit(training_images, training_labels, epochs=5, callbacks=[callbacks])
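The way the callback interacts with the training loop can be sketched with a toy loop. Everything here is hypothetical: `StopAtLoss` is a stand-in class rather than the Keras base class, `fake_fit` replays made-up loss values instead of training, and the stop flag lives on the callback itself, whereas a real Keras callback sets `self.model.stop_training`.

```python
class StopAtLoss:
    """Hypothetical stand-in for a Keras callback (not the real base class)."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.stop_training = False

    def on_epoch_end(self, epoch, logs=None):
        logs = logs or {}
        loss = logs.get('loss')
        # Guard against a missing metric before comparing.
        if loss is not None and loss < self.threshold:
            print(f"\nLoss {loss:.2f} is low so cancelling training!")
            self.stop_training = True


def fake_fit(losses_per_epoch, callback):
    """Toy loop: reads one pre-made loss per epoch instead of training."""
    epochs_run = 0
    for epoch, loss in enumerate(losses_per_epoch):
        epochs_run += 1
        # The loop hands the epoch's metrics to the callback via logs.
        callback.on_epoch_end(epoch, logs={'loss': loss})
        if callback.stop_training:
            break
    return epochs_run


made_up_losses = [0.62, 0.47, 0.39, 0.35, 0.33]
epochs_run = fake_fit(made_up_losses, StopAtLoss(threshold=0.4))
print(epochs_run)
```

With these made-up losses the loop stops after the third epoch, the first one whose loss is below 0.4, even though five epochs were available.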

Assignment

import os

import tensorflow as tf

from tensorflow import keras

# Load the data

# Get current working directory

current_dir = os.getcwd()

# Append data/mnist.npz to the previous path to get the full path

data_path = os.path.join(current_dir, "data/mnist.npz")

# Discard test set

(x_train, y_train), _ = tf.keras.datasets.mnist.load_data(path=data_path)

# Normalize pixel values

x_train = x_train / 255.0

data_shape = x_train.shape

print(f"There are {data_shape[0]} examples with shape ({data_shape[1]}, {data_shape[2]})")

# GRADED CLASS: myCallback

### START CODE HERE

# Remember to inherit from the correct class

class myCallback(tf.keras.callbacks.Callback):

    # Define the correct function signature for on_epoch_end

    def on_epoch_end(self, epoch, logs={}):

        if logs.get('accuracy') is not None and logs.get('accuracy') > 0.99:

            print("\nReached 99% accuracy so cancelling training!")

            # Stop training once the above condition is met

            self.model.stop_training = True

### END CODE HERE

# GRADED FUNCTION: train_mnist

def train_mnist(x_train, y_train):

    ### START CODE HERE

    # Instantiate the callback class

    callbacks = myCallback()

    # Define the model

    model = tf.keras.models.Sequential([

        tf.keras.layers.Flatten(),

        tf.keras.layers.Dense(128, activation=tf.nn.relu),

        tf.keras.layers.Dense(10, activation=tf.nn.softmax)

    ])

    # Compile the model

    model.compile(optimizer='adam',

                  loss='sparse_categorical_crossentropy',

                  metrics=['accuracy'])

    # Fit the model for 10 epochs adding the callbacks

    # and save the training history

    history = model.fit(x_train, y_train, epochs=10, callbacks=[callbacks])

    ### END CODE HERE

    return history

hist = train_mnist(x_train, y_train)

Week 3

model = tf.keras.models.Sequential([

    tf.keras.layers.Conv2D(64, (3, 3), activation='relu', input_shape=(28, 28, 1)),

    tf.keras.layers.MaxPooling2D(2, 2),

    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),

    tf.keras.layers.MaxPooling2D(2, 2),

    tf.keras.layers.Flatten(),

    tf.keras.layers.Dense(128, activation='relu'),

    tf.keras.layers.Dense(10, activation='softmax')

])

- First Conv2D: 64 filters of size (3, 3).

- MaxPooling2D: pooling window of size (2, 2).

model.summary()
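The shapes that model.summary() reports for this network can be worked out by hand. The sketch below assumes the Keras defaults for these layers (no padding and stride 1 for Conv2D, a stride equal to the pool size for MaxPooling2D); the helper names `conv_output` and `pool_output` are hypothetical.

```python
def conv_output(size, kernel=3):
    """Side length after an unpadded convolution with stride 1."""
    return size - kernel + 1


def pool_output(size, pool=2):
    """Side length after max pooling with a pool x pool window."""
    return size // pool


filters = 64
size = 28                  # input images are 28x28
size = conv_output(size)   # first Conv2D: a 3x3 filter trims one pixel per edge
size = pool_output(size)   # first MaxPooling2D halves each side
size = conv_output(size)   # second Conv2D
size = pool_output(size)   # second MaxPooling2D
flattened = size * size * filters  # number of values fed to the Dense layer
print(size, flattened)
```

Each convolution shrinks the image by two pixels per side and each pooling step halves it, so the Dense layer sees far fewer values than the original 28x28 image multiplied by 64 filters would suggest.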

Assignment

import os

import numpy as np

import tensorflow as tf

from tensorflow import keras

# Load the data

# Get current working directory

current_dir = os.getcwd()

# Append data/mnist.npz to the previous path to get the full path

data_path = os.path.join(current_dir, "data/mnist.npz")

# Get only training set

(training_images, training_labels), _ = tf.keras.datasets.mnist.load_data(path=data_path)

# GRADED FUNCTION: reshape_and_normalize

def reshape_and_normalize(images):

    ### START CODE HERE

    # Reshape the images to add an extra dimension

    images = images.reshape(*images.shape, 1)

    # Normalize pixel values

    images = np.divide(images, 255.0)

    ### END CODE HERE

    return images

# Reload the images in case you run this cell multiple times

(training_images, _), _ = tf.keras.datasets.mnist.load_data(path=data_path)

# Apply your function

training_images = reshape_and_normalize(training_images)

print(f"Maximum pixel value after normalization: {np.max(training_images)}\n")

print(f"Shape of training set after reshaping: {training_images.shape}\n")

print(f"Shape of one image after reshaping: {training_images[0].shape}")

# GRADED CLASS: myCallback

### START CODE HERE

# Remember to inherit from the correct class

class myCallback(tf.keras.callbacks.Callback):

    # Define the method that checks the accuracy at the end of each epoch

    def on_epoch_end(self, epoch, logs={}):

        if logs.get('accuracy') is not None and logs.get('accuracy') > 0.995:

            print('\nReached 99.5% accuracy so cancelling training')

            self.model.stop_training = True

### END CODE HERE

# GRADED FUNCTION: convolutional_model

def convolutional_model():

    ### START CODE HERE

    # Define the model

    model = tf.keras.models.Sequential([

        tf.keras.layers.Conv2D(64, (3, 3), activation='relu', input_shape=(28, 28, 1)),

        tf.keras.layers.MaxPooling2D(2, 2),

        tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),

        tf.keras.layers.MaxPooling2D(2, 2),

        tf.keras.layers.Flatten(),

        tf.keras.layers.Dense(128, activation='relu'),

        tf.keras.layers.Dense(10, activation='softmax')

    ])

    ### END CODE HERE

    # Compile the model

    model.compile(optimizer='adam',

                  loss='sparse_categorical_crossentropy',

                  metrics=['accuracy'])

    return model

# Save your untrained model

model = convolutional_model()

# Instantiate the callback class

callbacks = myCallback()

# Train your model (this can take up to 5 minutes)

history = model.fit(training_images, training_labels, epochs=10, callbacks=[callbacks])

Answer:

- A Conv2D layer with 32 filters, a kernel_size of 3x3, ReLU activation function and an input shape that matches that of every image in the training set

- A MaxPooling2D layer with a pool_size of 2x2

- A Flatten layer with no arguments

- A Dense layer with 128 units and ReLU activation function

- A Dense layer with 10 units and softmax activation function

Week 4

from tensorflow.keras.preprocessing.image import ImageDataGenerator

- Point it at a folder and it loads the images and labels them automatically.

train_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(

    train_dir,

    target_size=(300, 300),

    batch_size=128,

    class_mode='binary')

- Pass the directory that contains the subdirectories holding the images, not one of the image subdirectories themselves.

- Each subdirectory name becomes the label name.

- Images are resized as they are loaded (the source data itself is not changed).
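The directory-to-label rule can be sketched with the standard library alone. This is an approximation of the behavior, not the Keras code: as far as I understand, the generator assigns label indices in alphanumeric order of the subdirectory names and exposes the mapping as `class_indices`. The helper `infer_class_indices` and the folder names are hypothetical.

```python
import os
import tempfile


def infer_class_indices(directory):
    """Map each subdirectory name to an integer label, in sorted order."""
    class_names = sorted(
        name for name in os.listdir(directory)
        if os.path.isdir(os.path.join(directory, name))
    )
    return {name: index for index, name in enumerate(class_names)}


# Build a throwaway directory tree with one folder per class.
with tempfile.TemporaryDirectory() as train_dir:
    for class_name in ("humans", "horses"):
        os.makedirs(os.path.join(train_dir, class_name))
    class_indices = infer_class_indices(train_dir)

print(class_indices)
```

Under this rule the class whose folder name sorts last receives label 1, which matters when interpreting a single sigmoid output in binary mode.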

test_datagen = ImageDataGenerator(rescale=1./255)

validation_generator = test_datagen.flow_from_directory(

    validation_dir,

    target_size=(300, 300),

    batch_size=32,

    class_mode='binary')

horses vs. humans

model = tf.keras.models.Sequential([

    tf.keras.layers.Conv2D(16, (3, 3), activation='relu', input_shape=(300, 300, 3)),

    tf.keras.layers.MaxPooling2D(2, 2),

    tf.keras.layers.Conv2D(32, (3, 3), activation='relu'),

    tf.keras.layers.MaxPooling2D(2, 2),

    tf.keras.layers.Conv2D(64, (3, 3), activation='relu'),

    tf.keras.layers.MaxPooling2D(2, 2),

    tf.keras.layers.Flatten(),

    tf.keras.layers.Dense(512, activation='relu'),

    tf.keras.layers.Dense(1, activation='sigmoid')

])

- Three convolutional layers.

- Note the input_shape: 300x300 images with 3 color channels.

- Note the output layer: a single neuron with sigmoid activation, which is enough for two classes.

from tensorflow.keras.optimizers import RMSprop

model.compile(loss='binary_crossentropy', optimizer=RMSprop(learning_rate=0.001), metrics=['accuracy'])

- Binary classification, so the loss is binary crossentropy.
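As a rough illustration of why binary crossentropy suits a single sigmoid output, the sketch below computes the loss by hand for a few made-up labels and predicted probabilities. It is a simplified version written for this note, not the Keras implementation; the clipping constant `eps` is an assumption.

```python
import math


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Average binary cross-entropy over paired labels and probabilities."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip so log() never sees 0
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


labels = [1, 0, 1]  # made-up ground truth
mostly_right = binary_crossentropy(labels, [0.9, 0.1, 0.8])
mostly_wrong = binary_crossentropy(labels, [0.1, 0.9, 0.2])
print(mostly_right, mostly_wrong)
```

Predictions that put high probability on the wrong class are penalized much more heavily than confident correct ones, which is the signal the optimizer uses to adjust the weights.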

history = model.fit(

    train_generator,

    steps_per_epoch=8,

    epochs=15,

    validation_data=validation_generator,

    validation_steps=8,

    verbose=2)

- train_generator: there are 1024 training images in total, split into batches of 128, so 8 batches must be loaded to cover all of them (steps_per_epoch=8).
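The batch arithmetic is small enough to check directly. The helper name `steps_for` is hypothetical, and rounding up is my assumption for the case where the image count does not divide evenly by the batch size.

```python
import math


def steps_for(num_images, batch_size):
    """Number of batches needed to see every image once."""
    return math.ceil(num_images / batch_size)


train_steps = steps_for(1024, 128)  # figures from the notes
print(train_steps)
```

If the image count were not a multiple of the batch size, the last batch would simply be smaller, which is why the sketch rounds up rather than down.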

import numpy as np

from tensorflow.keras.preprocessing import image

img = image.load_img(path, target_size=(300, 300))

x = image.img_to_array(img)

x = np.expand_dims(x, axis=0)

images = np.vstack([x])

classes = model.predict(images, batch_size=10)

if classes[0] > 0.5:

    print('human')

else:

    print('horse')

Assignment

import matplotlib.pyplot as plt

import tensorflow as tf

import numpy as np

import os

from tensorflow.keras.preprocessing.image import load_img

base_dir = "./data/"

happy_dir = os.path.join(base_dir, "happy/")

sad_dir = os.path.join(base_dir, "sad/")

print("Sample happy image:")

plt.imshow(load_img(f"{os.path.join(happy_dir, os.listdir(happy_dir)[0])}"))

plt.show()

print("\nSample sad image:")

plt.imshow(load_img(f"{os.path.join(sad_dir, os.listdir(sad_dir)[0])}"))

plt.show()

from tensorflow.keras.preprocessing.image import img_to_array

# Load the first example of a happy face

sample_image = load_img(f"{os.path.join(happy_dir, os.listdir(happy_dir)[0])}")

# Convert the image into its numpy array representation

sample_array = img_to_array(sample_image)

print(f"Each image has shape: {sample_array.shape}")

print(f"The maximum pixel value used is: {np.max(sample_array)}")

class myCallback(tf.keras.callbacks.Callback):

    def on_epoch_end(self, epoch, logs={}):

        if logs.get('accuracy') is not None and logs.get('accuracy') > 0.999:

            print("\nReached 99.9% accuracy so cancelling training!")

            self.model.stop_training = True

from tensorflow.keras.preprocessing.image import ImageDataGenerator

# GRADED FUNCTION: image_generator

def image_generator():

    ### START CODE HERE

    # Instantiate the ImageDataGenerator class.

    # Remember to set the rescale argument.

    train_datagen = ImageDataGenerator(rescale=1./255)

    # Specify the method to load images from a directory and pass in the appropriate arguments:

    # - directory: should be a relative path to the directory containing the data

    # - target_size: set this equal to the resolution of each image (excluding the color dimension)

    # - batch_size: number of images the generator yields when asked for a next batch. Set this to 10.

    # - class_mode: How the labels are represented. Should be one of "binary", "categorical" or "sparse".

    # Pick the one that better suits here given that the labels are going to be 1D binary labels.

    train_generator = train_datagen.flow_from_directory(directory=base_dir,

                                                        target_size=(150, 150),

                                                        batch_size=10,

                                                        class_mode='binary')

    ### END CODE HERE

    return train_generator

# Save your generator in a variable

gen = image_generator()

from tensorflow.keras import optimizers, losses

# GRADED FUNCTION: train_happy_sad_model

def train_happy_sad_model(train_generator):

    # Instantiate the callback

    callbacks = myCallback()

    ### START CODE HERE

    # Define the model

    model = tf.keras.models.Sequential([

        tf.keras.layers.Conv2D(32, (3, 3), activation='relu', input_shape=(150, 150, 3)),

        tf.keras.layers.MaxPooling2D(2, 2),

        tf.keras.layers.Conv2D(32, (3, 3), activation='relu'),

        tf.keras.layers.MaxPooling2D(2, 2),

        tf.keras.layers.Conv2D(32, (3, 3), activation='relu'),

        tf.keras.layers.MaxPooling2D(2, 2),

        tf.keras.layers.Flatten(),

        tf.keras.layers.Dense(128, activation='relu'),

        tf.keras.layers.Dense(1, activation='sigmoid')

    ])

    # Compile the model

    # Select a loss function compatible with the last layer of your network

    # The original notes used Adam(lr=0.001, decay=1e-6); lr is renamed to learning_rate here,

    # and decay is left out because newer Keras optimizers do not appear to accept that argument.

    model.compile(loss=losses.binary_crossentropy,

                  optimizer=optimizers.Adam(learning_rate=0.001),

                  metrics=['accuracy'])

    # Train the model

    # Your model should achieve the desired accuracy in less than 15 epochs.

    # You can hardcode up to 20 epochs in the function below but the callback should trigger before 15.

    history = model.fit(x=train_generator,

                        epochs=20,

                        callbacks=[callbacks]

                        )

    ### END CODE HERE

    return history

hist = train_happy_sad_model(gen)
