Published 2022. 8. 28. 17:54

For practice, I took an example that uses a BERT model and applied it as-is, with no preprocessing at all.

The model is built as follows:

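```python
import tensorflow as tf
import tensorflow_hub as hub

# BERT-Base, uncased (12 layers, hidden size 768, 12 attention heads) from TF Hub
m_url = 'https://tfhub.dev/tensorflow/bert_en_uncased_L-12_H-768_A-12/2'
bert_layer = hub.KerasLayer(m_url, trainable=True)  # fine-tune the BERT weights as well

def build_model(bert_layer, max_len=512):
    # The three integer inputs this BERT layer expects, each of shape (batch, max_len)
    input_word_ids = tf.keras.Input(shape=(max_len,), dtype=tf.int32, name="input_word_ids")
    input_mask = tf.keras.Input(shape=(max_len,), dtype=tf.int32, name="input_mask")
    segment_ids = tf.keras.Input(shape=(max_len,), dtype=tf.int32, name="segment_ids")

    pooled_output, sequence_output = bert_layer([input_word_ids, input_mask, segment_ids])
    # Final hidden state of the first token ([CLS]) serves as the sentence representation
    clf_output = sequence_output[:, 0, :]

    # Small classification head on top of BERT
    net = tf.keras.layers.Dense(64, activation='relu')(clf_output)
    net = tf.keras.layers.Dropout(0.2)(net)
    net = tf.keras.layers.Dense(32, activation='relu')(net)
    net = tf.keras.layers.Dropout(0.2)(net)
    out = tf.keras.layers.Dense(1, activation='sigmoid')(net)  # probability of the positive class

    model = tf.keras.Model(inputs=[input_word_ids, input_mask, segment_ids], outputs=out)
    # `lr` is deprecated in current Keras; `learning_rate` is the supported argument name
    model.compile(tf.keras.optimizers.Adam(learning_rate=1e-5),
                  loss='binary_crossentropy', metrics=['accuracy'])
    return model
```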
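`build_model` takes three integer arrays of length `max_len` rather than raw text, so even with no text cleaning, each example still has to be tokenized and packed into that layout. Below is a minimal pure-Python sketch of the packing step for single-sentence inputs. It assumes the text has already been converted to WordPiece token IDs by a tokenizer (not shown), and it assumes the usual `bert_en_uncased` vocabulary IDs: 101 for `[CLS]`, 102 for `[SEP]`, 0 for padding. `encode_for_bert` is a hypothetical helper name, not part of TF Hub or Keras.

```python
from typing import List, Tuple

# Assumed special-token IDs from the bert_en_uncased vocabulary
CLS_ID = 101  # [CLS]
SEP_ID = 102  # [SEP]
PAD_ID = 0    # [PAD]


def encode_for_bert(token_ids: List[int], max_len: int = 512) -> Tuple[List[int], List[int], List[int]]:
    """Hypothetical helper: pack one sentence's token IDs into
    (input_word_ids, input_mask, segment_ids), each of length max_len."""
    if max_len < 2:
        raise ValueError("max_len must leave room for [CLS] and [SEP]")
    # Truncate so that [CLS] + tokens + [SEP] fits in max_len
    body = token_ids[: max_len - 2]
    ids = [CLS_ID] + body + [SEP_ID]
    pad_len = max_len - len(ids)
    input_word_ids = ids + [PAD_ID] * pad_len
    # 1 for real tokens, 0 for padding positions
    input_mask = [1] * len(ids) + [0] * pad_len
    # Single-sentence input: every position belongs to segment 0
    segment_ids = [0] * max_len
    return input_word_ids, input_mask, segment_ids
```

For example, `encode_for_bert([2000, 3000], max_len=6)` (arbitrary token IDs) gives word IDs `[101, 2000, 3000, 102, 0, 0]`, mask `[1, 1, 1, 1, 0, 0]` and all-zero segment IDs. Position 0 always holds `[CLS]`, which is the token `sequence_output[:, 0, :]` selects in the model. Stacking the per-example lists into arrays of shape `(n, max_len)` gives the three inputs the model expects.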

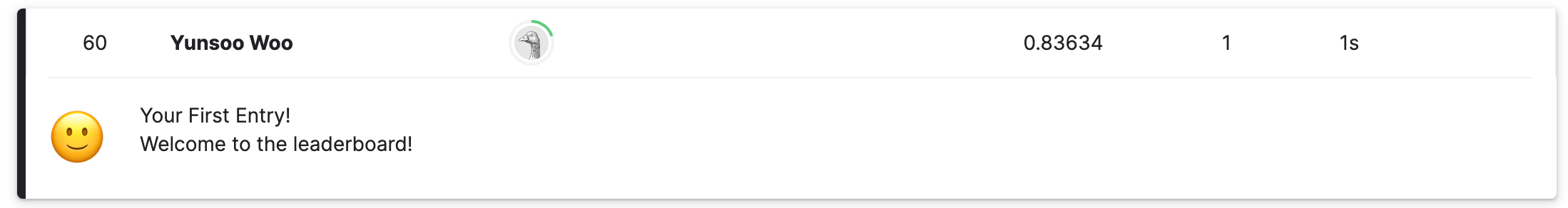

The result: 60th out of 702 (roughly the top 9%), so making the top 25% this way seems easy.
